Research

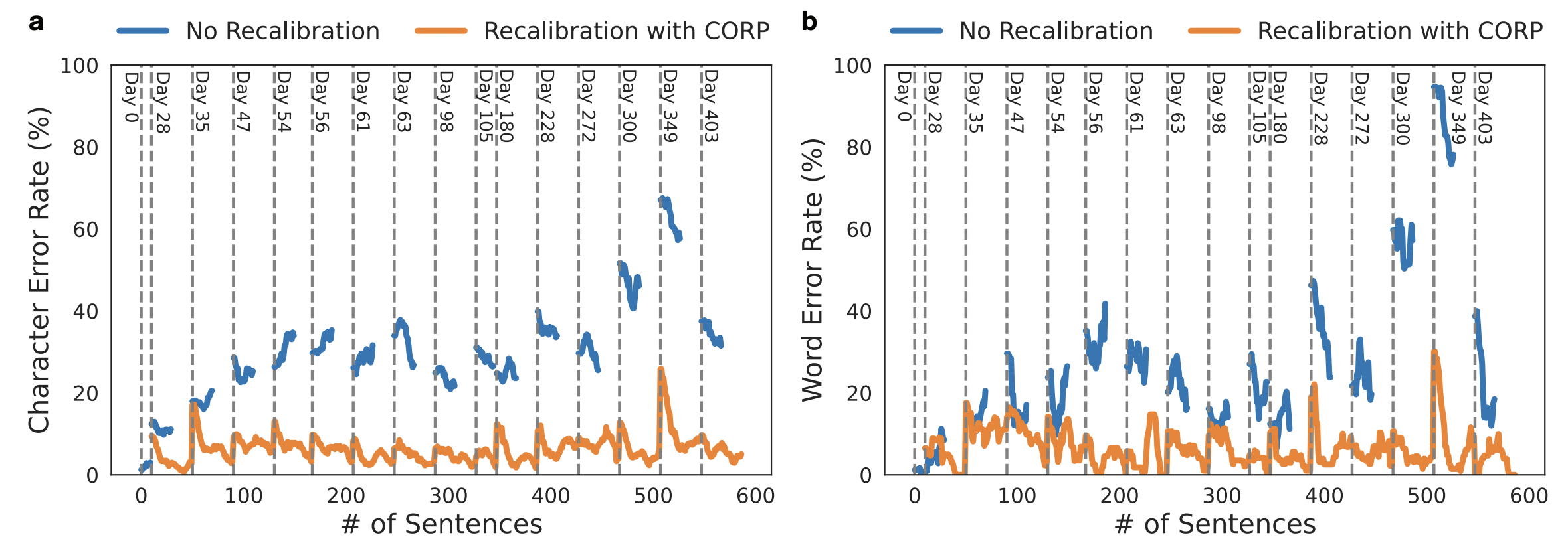

B-CORP: Bayesian Improvements on Continuous Online Recalibration with Pseudolabels

Brain–computer interfaces (BCIs) must continuously adapt as neural signals drift over time. The CORP algorithm addresses this problem by recalibrating a pretrained decoder using pseudolabels derived from language model rescoring, but it does so in an ad hoc way: it selects a single best hypothesis without accounting for uncertainty. We propose a simple reinterpretation of CORP as an approximate Bayesian filtering procedure (B-CORP), in which recalibration corresponds to updating decoder parameters in response to evidence about likely outputs. This perspective clarifies that CORP effectively performs coordinate ascent on a joint likelihood over both parameters and pseudolabels, discarding variability in the hypothesis space that could provide useful information. Building on this insight, we introduce a marginalized version of CORP that replaces the hard pseudolabel with a soft weighting over the top candidate hypotheses. This small change turns the update into a smoother, lower-variance learning rule that better captures uncertainty in the pseudolabel distribution. In a simple classification setting with drifting features but stable class probabilities, this approach leads to more stable adaptation and improved accuracy. More broadly, the framework provides a principled link between pseudolabel-based recalibration methods and online Bayesian inference, suggesting new strategies for BCI decoders that leverage uncertainty rather than suppressing it.
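
The contrast between a hard pseudolabel and a soft weighting over top candidates can be illustrated with a minimal sketch. It assumes a linear softmax decoder and a vector of rescored candidate log-scores; the function names, the `top_k` parameter, and the learning rate are illustrative assumptions, not the B-CORP implementation.

```python
import numpy as np

def softmax(z):
    # Numerically stable softmax over a 1-D array.
    z = z - np.max(z)
    e = np.exp(z)
    return e / e.sum()

def pseudolabel_target(scores, top_k=None):
    """Build a target distribution over classes from candidate scores.

    scores: hypothetical rescored log-scores, one per class (an assumption).
    top_k=None gives a hard one-hot target on the best candidate;
    otherwise the target is a softmax over the top_k candidates.
    """
    target = np.zeros(scores.shape[0])
    if top_k is None:
        target[np.argmax(scores)] = 1.0
        return target
    top = np.argsort(scores)[-top_k:]
    target[top] = softmax(scores[top])
    return target

def recalibration_step(W, x, scores, lr=0.1, top_k=None):
    """One gradient step of cross-entropy against the pseudolabel target."""
    probs = softmax(W @ x)
    target = pseudolabel_target(scores, top_k)
    grad = np.outer(probs - target, x)  # gradient of cross-entropy w.r.t. W
    return W - lr * grad
```

With `top_k=None` the update chases a single hypothesis; with `top_k=2` or more, near-tied candidates share the gradient signal, which is the smoothing effect described above.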

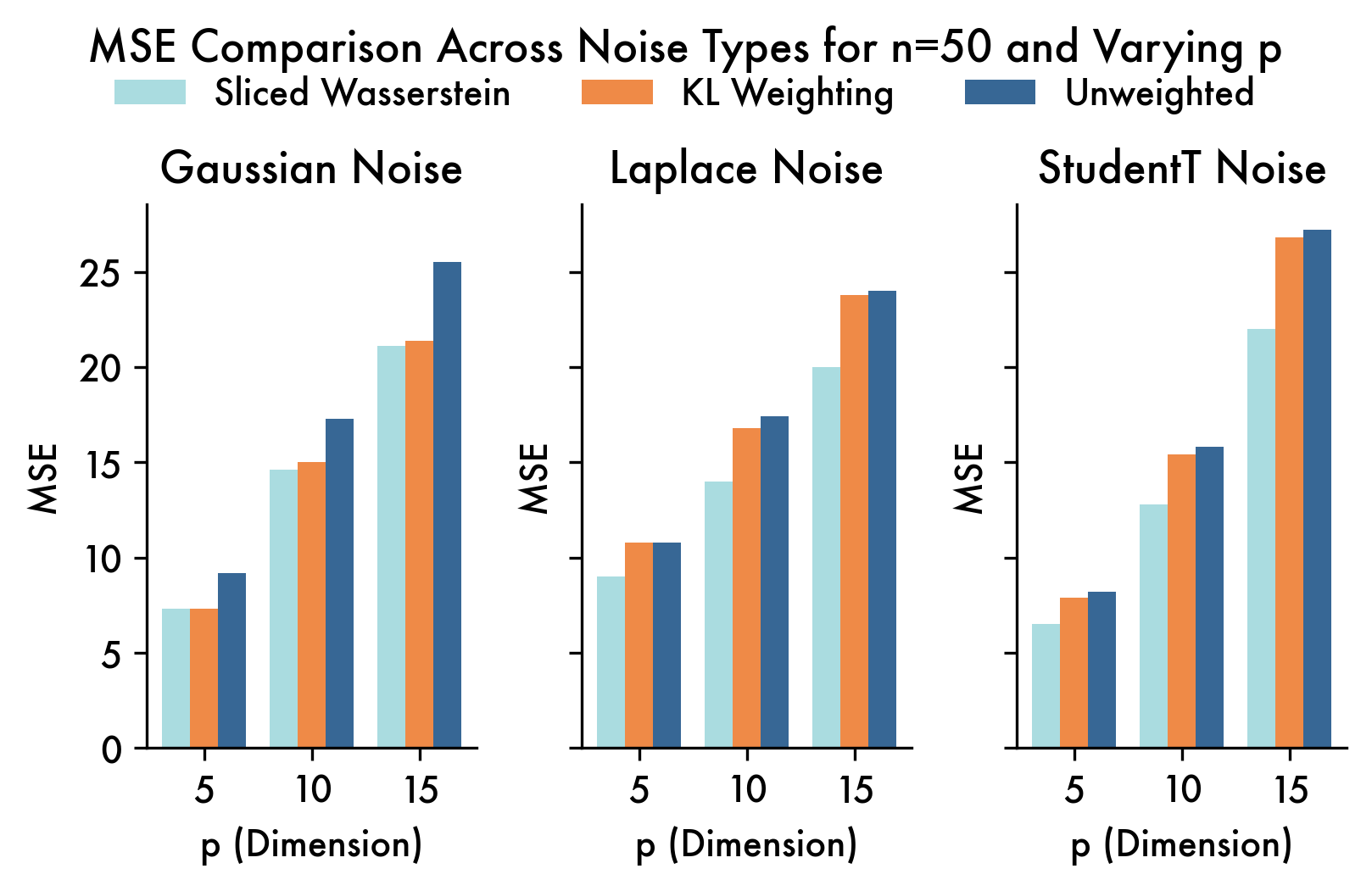

Robustness via Sliced Wasserstein Pooling

Since much of the covariate shift that occurs in brain–computer interface data is due to movement of the electrode array, we propose a method for training robust neural networks by aligning the spaces of the covariates observed on a particular day according to their Sliced Wasserstein distance. The method can be computed efficiently and improves performance on real-world intracortical brain–computer interface data.
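
For readers unfamiliar with the distance itself, here is a minimal Monte Carlo sketch of the sliced 2-Wasserstein distance between two equal-size samples. It shows only the distance, not the pooling method; the function name, the number of projections, and the equal-sample-size restriction are simplifying assumptions.

```python
import numpy as np

def sliced_wasserstein(X, Y, n_projections=128, seed=0):
    """Estimate the sliced 2-Wasserstein distance between samples X and Y.

    X, Y: arrays of shape (n, d) with the same n (assumed for simplicity).
    Each random direction reduces the problem to 1-D, where optimal
    transport amounts to matching sorted projections.
    """
    rng = np.random.default_rng(seed)
    d = X.shape[1]
    dirs = rng.normal(size=(n_projections, d))
    dirs /= np.linalg.norm(dirs, axis=1, keepdims=True)  # unit directions
    px = np.sort(X @ dirs.T, axis=0)  # sorted projections, shape (n, n_projections)
    py = np.sort(Y @ dirs.T, axis=0)
    return np.sqrt(np.mean((px - py) ** 2))
```

The cost is dominated by sorting, roughly O(n log n) per projection, which is what makes sliced variants cheap compared with solving a full optimal transport problem in d dimensions.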

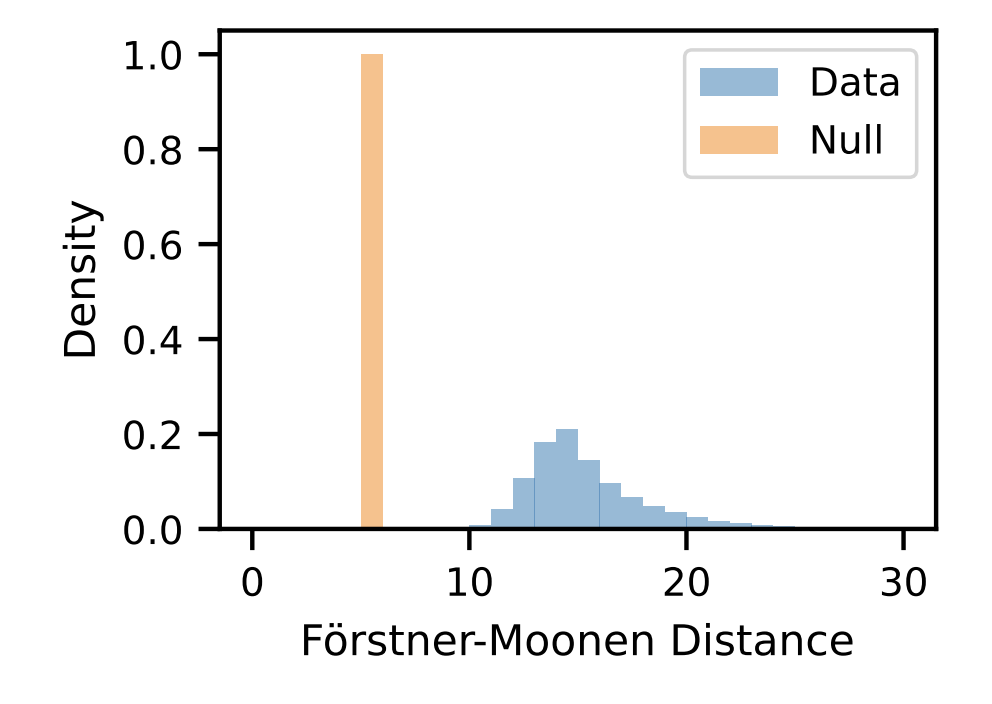

Bayesian Nonparametric Perspective on Mahalanobis Distance for Out-of-Distribution Detection

Bayesian nonparametric methods are naturally suited to the problem of out-of-distribution (OOD) detection. However, these techniques have largely been eschewed in favor of simpler methods based on distances between pre-trained or learned embeddings of data points. Here we show a formal relationship between Bayesian nonparametric models and the relative Mahalanobis distance score (RMDS), a commonly used method for OOD detection. Building on this connection, we propose Bayesian nonparametric mixture models with hierarchical priors that generalize the RMDS. We evaluate these models on the OpenOOD detection benchmark and show that Bayesian nonparametric methods can improve upon existing OOD methods, especially in regimes where training classes differ in their covariance structure and where there are relatively few data points per class.

Collaborators: Randolph W. Linderman, Yiran Chen, and Scott W. Linderman
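
As background for the abstract above, the standard relative Mahalanobis distance score can be sketched as follows: class-conditional Gaussians with a shared covariance are compared against a single background Gaussian fit to all training embeddings. This is the baseline score only, not the Bayesian nonparametric generalization; the function names are illustrative, and the pseudo-inverse is a convenience assumption for ill-conditioned covariances.

```python
import numpy as np

def fit_rmds(Z, y):
    """Fit class means, a shared covariance, and a background Gaussian.

    Z: training embeddings of shape (n, d); y: integer class labels of shape (n,).
    """
    classes = np.unique(y)
    means = np.stack([Z[y == c].mean(axis=0) for c in classes])
    centered = Z - means[np.searchsorted(classes, y)]  # subtract each point's class mean
    shared_prec = np.linalg.pinv(centered.T @ centered / len(Z))
    bg_mean = Z.mean(axis=0)
    bg_prec = np.linalg.pinv(np.cov(Z, rowvar=False, bias=True))
    return means, shared_prec, bg_mean, bg_prec

def _sq_mahalanobis(Z, mean, prec):
    diff = Z - mean
    return np.einsum("ni,ij,nj->n", diff, prec, diff)

def rmds_score(Z, params):
    """Return min over classes of (class distance - background distance).

    Larger values suggest the point is more likely out of distribution.
    """
    means, shared_prec, bg_mean, bg_prec = params
    class_d = np.stack([_sq_mahalanobis(Z, m, shared_prec) for m in means], axis=1)
    bg_d = _sq_mahalanobis(Z, bg_mean, bg_prec)
    return (class_d - bg_d[:, None]).min(axis=1)
```

Because every class shares one covariance here, the score cannot reflect classes whose covariance structure differs, which is the regime the abstract highlights.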

Recipe Recommender with Graph Neural Networks

Graph neural networks (GNNs) have become a popular approach for modeling graph-structured data in recent years. By treating FlavorGraph as a network and applying GNNs, we can leverage its rich flavor information to predict new edges between ingredients, indicating potential flavor pairings or substitutions. This project was featured by the CS224W team as a best project in a class of over 500 students.

Collaborators: Will Shabecoff and Kushagra Gupta
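
Link prediction with a GNN can be sketched generically as a graph-convolution encoder followed by an inner-product decoder. The sketch below is not the project's model: the two-layer architecture, function names, and weight shapes are assumptions, and in practice the weights would be trained on observed edges rather than left at their initial values.

```python
import numpy as np

def normalized_adjacency(A):
    """Symmetrically normalize an adjacency matrix after adding self-loops."""
    A_hat = A + np.eye(A.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(A_hat.sum(axis=1))
    return A_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def encode(A_norm, X, W1, W2):
    """Two rounds of neighborhood aggregation producing node embeddings."""
    H = np.maximum(A_norm @ X @ W1, 0.0)  # ReLU hidden layer
    return A_norm @ H @ W2                # linear output layer

def edge_scores(Z):
    """Inner-product decoder: probability-like score for every node pair."""
    return 1.0 / (1.0 + np.exp(-(Z @ Z.T)))
```

High-scoring pairs that are not already connected are the candidate new edges, which in this setting would correspond to suggested ingredient pairings.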